Are you an AI or machine learning (ML) provider aiming to showcase your commitment to managing AI-related risks? ISO 42001 certification is the gold standard for demonstrating a robust internal control framework, giving your customers confidence in your responsible AI practices. At Coral, we specialize in guiding AI and ML organizations through the process of obtaining ISO 42001 certification. With this certification, your customers can rest assured that your AI risks are proactively managed throughout the development lifecycle. Our proven consulting methodology includes Scoping, AI System Impact Assessment, and establishing an AI Governance Program. Take the first step towards ISO 42001 certification and elevate your organization’s credibility in the AI landscape.

Contact us today to learn how we can simplify the certification journey for you.

Summary: At this stage, the organization has successfully implemented the baseline requirements for achieving ISO 42001 certification.

| Control Area | Number of Controls |
|---|---|
| Policies related to AI | 3 |
| Internal organization | 2 |
| Resources for AI systems | 5 |
| Assessing impacts of AI systems | 4 |
| AI system life cycle | 9 |
| Data for AI systems | 5 |
| Information for interested parties of AI systems | 4 |
| Use of AI systems | 3 |
| Third-party and customer relationships | 3 |
| Total | 38 |
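
As an illustration of how the control counts in the table above might be tracked during implementation, here is a minimal Python sketch. The counts are copied from the table; the `coverage` helper and the `progress` figures are hypothetical and are not part of ISO 42001 or of any Coral tooling.

```python
# Control counts per control area, copied from the table above.
CONTROL_COUNTS = {
    "Policies related to AI": 3,
    "Internal organization": 2,
    "Resources for AI systems": 5,
    "Assessing impacts of AI systems": 4,
    "AI system life cycle": 9,
    "Data for AI systems": 5,
    "Information for interested parties of AI systems": 4,
    "Use of AI systems": 3,
    "Third-party and customer relationships": 3,
}


def total_controls(counts):
    """Sum the controls across all control areas."""
    return sum(counts.values())


def coverage(counts, implemented):
    """Return the implemented fraction per area, plus an "Overall" entry.

    `implemented` maps a control area to the number of controls already
    in place; areas that are missing from it count as zero.
    """
    report = {}
    for area, required in counts.items():
        done = implemented.get(area, 0)
        if not 0 <= done <= required:
            raise ValueError(f"{area}: {done} implemented, {required} required")
        report[area] = done / required
    done_total = sum(implemented.get(area, 0) for area in counts)
    report["Overall"] = done_total / total_controls(counts)
    return report


if __name__ == "__main__":
    # Hypothetical progress figures, for illustration only.
    progress = {"Policies related to AI": 3, "AI system life cycle": 3}
    print("Total controls:", total_controls(CONTROL_COUNTS))
    for area, fraction in coverage(CONTROL_COUNTS, progress).items():
        print(f"{area}: {fraction:.0%}")
```

A real implementation tracker would record evidence for each individual control; the sketch only shows how the per-area counts add up to the total.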

Listed below are the ISO 42001 Certification Consultant Responsibilities:

At this stage, the organization would have achieved ISO 42001 certification.

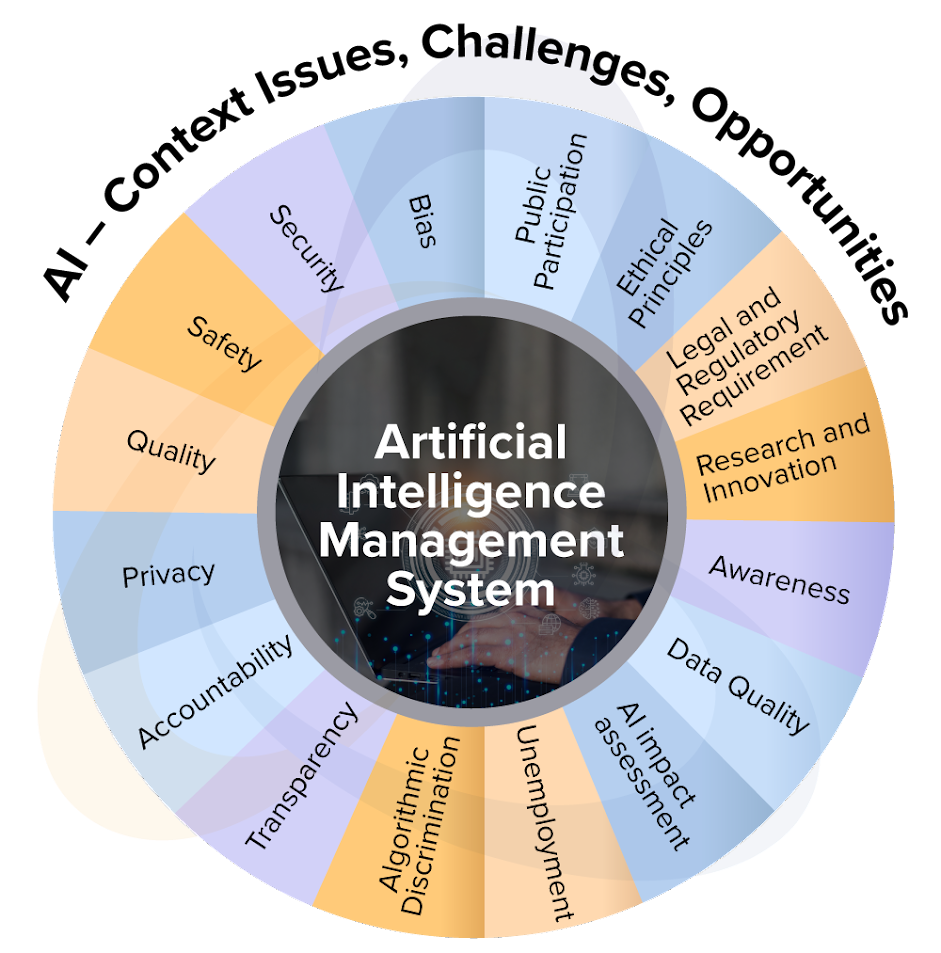

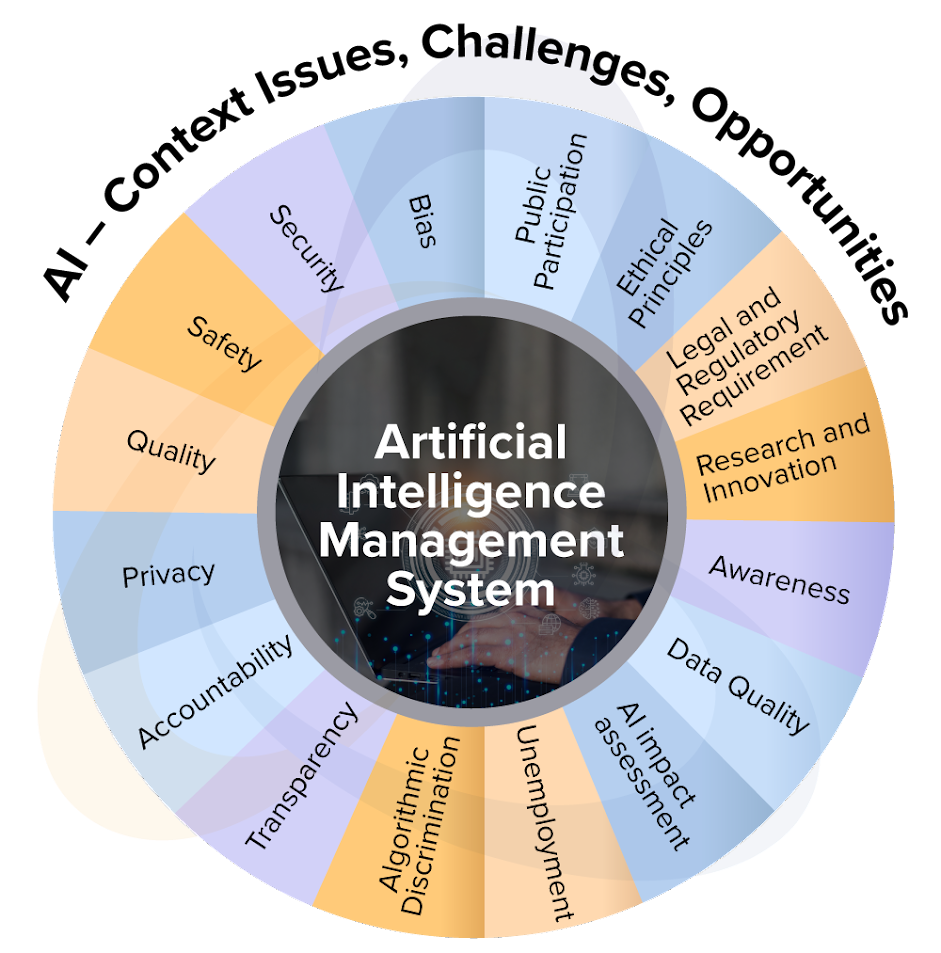

Artificial Intelligence (AI) is transforming industries worldwide, offering powerful solutions to enhance productivity, efficiency, and decision-making. However, as the adoption of AI grows, so do the complexities and ethical dilemmas surrounding its deployment. This is where a Responsible AI Consultant plays a critical role, guiding businesses to navigate the challenges and opportunities of AI ethically and sustainably.

AI systems often make decisions based on data, but this data can sometimes reflect societal biases. A responsible AI consultant ensures that AI solutions are designed to minimize biases and promote fairness. This includes reviewing datasets, algorithms, and decision-making processes to ensure inclusivity and equity. By fostering ethical AI practices, consultants help businesses avoid reputational damage and ensure their technology benefits everyone equally.

The rise of AI has led to the implementation of stringent laws and regulations, such as the European Union's (EU) Artificial Intelligence (AI) Act. Non-compliance can result in hefty fines and legal repercussions. A responsible AI consultant helps organizations understand and adhere to these regulations, ensuring their AI systems operate within the legal framework.

Consumers, employees, and other stakeholders are increasingly demanding transparency in how AI systems make decisions. Without trust, businesses risk losing their competitive edge. A responsible AI consultant ensures that AI models are interpretable and explainable, fostering trust by showing stakeholders how and why decisions are made. This transparency is crucial for building long-term relationships and securing customer loyalty.

AI systems can inadvertently cause harm, such as incorrect predictions or data breaches. A responsible AI consultant identifies potential risks in AI systems and implements measures to mitigate them. This proactive approach minimizes the likelihood of harm and ensures the safe deployment of AI technologies.

AI adoption should align with a company’s mission, values, and objectives. A responsible AI consultant ensures that AI strategies support long-term business goals while upholding ethical standards. This alignment not only drives innovation but also ensures that the AI solutions deliver measurable value to the organization.

The environmental impact of AI, such as high energy consumption during data processing and training, is often overlooked. A responsible AI consultant works to optimize AI systems to reduce their carbon footprint, contributing to the organization’s sustainability goals.

AI consultants can assist in implementing standards such as ISO 42001 and the NIST AI RMF. These standards help an organization define internal processes that embed responsible AI in each process associated with the AI development lifecycle.

As AI continues to evolve, its potential for both good and harm increases. A Responsible AI Consultant acts as a guide, ensuring that AI systems are not only effective but also ethical, transparent, and aligned with societal values. By addressing ethical concerns, managing risks, and promoting compliance, these consultants play a crucial role in fostering trust, innovation, and sustainability in the AI landscape.

For organizations looking to implement AI responsibly and reap its benefits while minimizing risks, partnering with a Responsible AI Consultant is no longer optional—it’s a necessity.

ISO 42001 provides organizations with a structured approach to managing the risks associated with the design, development, deployment, and use of artificial intelligence systems. Implementing ISO 42001 offers several key benefits:

Enhanced Trustworthiness of AI Systems

Risk Identification and Mitigation

Regulatory and Legal Alignment

Cross-Organizational Collaboration

Innovation and Competitive Advantage

Flexibility and Adaptability

Stakeholder Confidence and Public Trust

By implementing ISO 42001, organizations can achieve both risk resilience and responsible AI adoption, ensuring that their AI initiatives succeed while safeguarding against potential downsides.

Rob (Customer): Thanks Carol and Jennifer for your time today.

Carol (Consultant): You are most welcome; I will share all my experiences to answer the questions.

Jennifer (CB Auditor): Absolutely, I will share my perspective as an ISO 42001 certification auditor.

Rob: What is Responsible AI?

Carol: Responsible AI refers to the design, development, and deployment of AI systems that are fair, transparent, accountable, and aligned with ethical and societal values.

Jennifer: From an ISO 42001 perspective, Responsible AI aligns with risk management principles, emphasizing compliance with standards, ethical practices, and mitigating potential harms to individuals and society.

Rob: What is ISO 42001:2023?

Jennifer: ISO 42001:2023 is a global standard for managing risks in AI systems. It provides a structured framework for organizations to identify, assess, and mitigate AI risks while ensuring ethical and responsible AI practices. It comprises management system requirements and Annex controls. To achieve certification, an organization needs to implement these requirements.

Rob: Why is Responsible AI practice important to an organization?

Carol: It builds trust, reduces legal and reputational risks, ensures compliance with regulations, and aligns AI initiatives with corporate values and societal expectations.

Jennifer: Additionally, certification under ISO 42001 demonstrates accountability and commitment to responsible AI, which enhances credibility with stakeholders.

Rob: What is the role of an AI ethics consultant?

Carol: An AI ethics consultant helps organizations design ethical AI strategies, identify and mitigate biases, establish governance frameworks, and align practices with standards like ISO 42001 or NIST frameworks.

Rob: How is AI used in risk management?

Carol: AI can analyze large datasets to predict risks, detect anomalies, and automate decision-making, enhancing speed and accuracy in risk assessments.

Jennifer: ISO 42001 ensures that while AI improves risk management, the technology itself is managed responsibly to avoid introducing new risks.

Rob: What is an AI risk assessment?

Carol: It’s a systematic process to identify potential harms, biases, and vulnerabilities in AI systems, evaluating their likelihood and impact.

Jennifer: ISO 42001 standardizes this process, ensuring it’s comprehensive and consistent across the organization.
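
To make the idea of evaluating likelihood and impact concrete, here is a minimal Python sketch of a risk register. It is an illustration under stated assumptions, not a method prescribed by ISO 42001: the 1 to 5 scales, the likelihood-times-impact score, the rating thresholds, and the example risks are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIRisk:
    """One entry in a hypothetical AI risk register."""

    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    def __post_init__(self):
        # Reject values outside the assumed 1-5 scales.
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    def score(self) -> int:
        """Simple likelihood x impact score, ranging from 1 to 25."""
        return self.likelihood * self.impact


def rating(score: int) -> str:
    """Map a score to a band; the thresholds are illustrative assumptions."""
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


def rank(risks):
    """Return the risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda risk: risk.score(), reverse=True)


if __name__ == "__main__":
    # Hypothetical example risks, for illustration only.
    register = [
        AIRisk("Incorrect predictions in production", likelihood=3, impact=3),
        AIRisk("Biased training data", likelihood=4, impact=4),
        AIRisk("Data breach via model endpoint", likelihood=1, impact=5),
    ]
    for risk in rank(register):
        print(f"{risk.name}: score {risk.score()} ({rating(risk.score())})")
```

An organization would normally define its own scales and acceptance criteria; the point of the sketch is only that each identified risk receives a likelihood, an impact, and a resulting priority.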

Rob: What is AI risk management certification for an organization?

Jennifer: Certification, such as ISO 42001, verifies that an organization’s AI risk management practices meet international standards, fostering trust and accountability.

Rob: What is the difference between the NIST AI Risk Management Framework and ISO 42001?

Carol: NIST focuses on risk assessment and mitigation through a flexible, iterative framework. ISO 42001 builds on the same principle and provides a governance framework that an organization can use to achieve certification. I would say an organization should consider both for implementation purposes.

Jennifer: ISO 42001 is the global certification for AI risk management practices. An organization can be certified against ISO 42001; there is no certification for the NIST AI framework.

Rob: What are the pillars of Responsible AI?

Carol: Fairness, transparency, accountability, privacy, safety, inclusivity, and sustainability form the pillars of Responsible AI.

Jennifer: ISO 42001 integrates these principles into its risk management framework.

Rob: What factors should be considered when adopting Responsible AI guardrails?

Carol: Organizations should consider ethical implications, regulatory requirements, societal impact, stakeholder trust, and technological feasibility.

Jennifer: ISO 42001 provides guidelines to ensure these factors are addressed systematically.

Rob: What is necessary to mitigate the risks of using AI tools?

Carol: Effective mitigation requires robust governance, continuous risk assessment, bias audits, user education, and ethical safeguards.

Jennifer: ISO 42001 certification ensures a structured approach to these mitigations, backed by global best practices.

Rob: Thanks for all your responses. With so many benefits in place, let’s get started with our ISO 42001 implementation.

Carol: Absolutely.

Jennifer: When you are ready, let us know and we will start the certification process.

© 2025 www.coralesecure.com. All rights reserved.